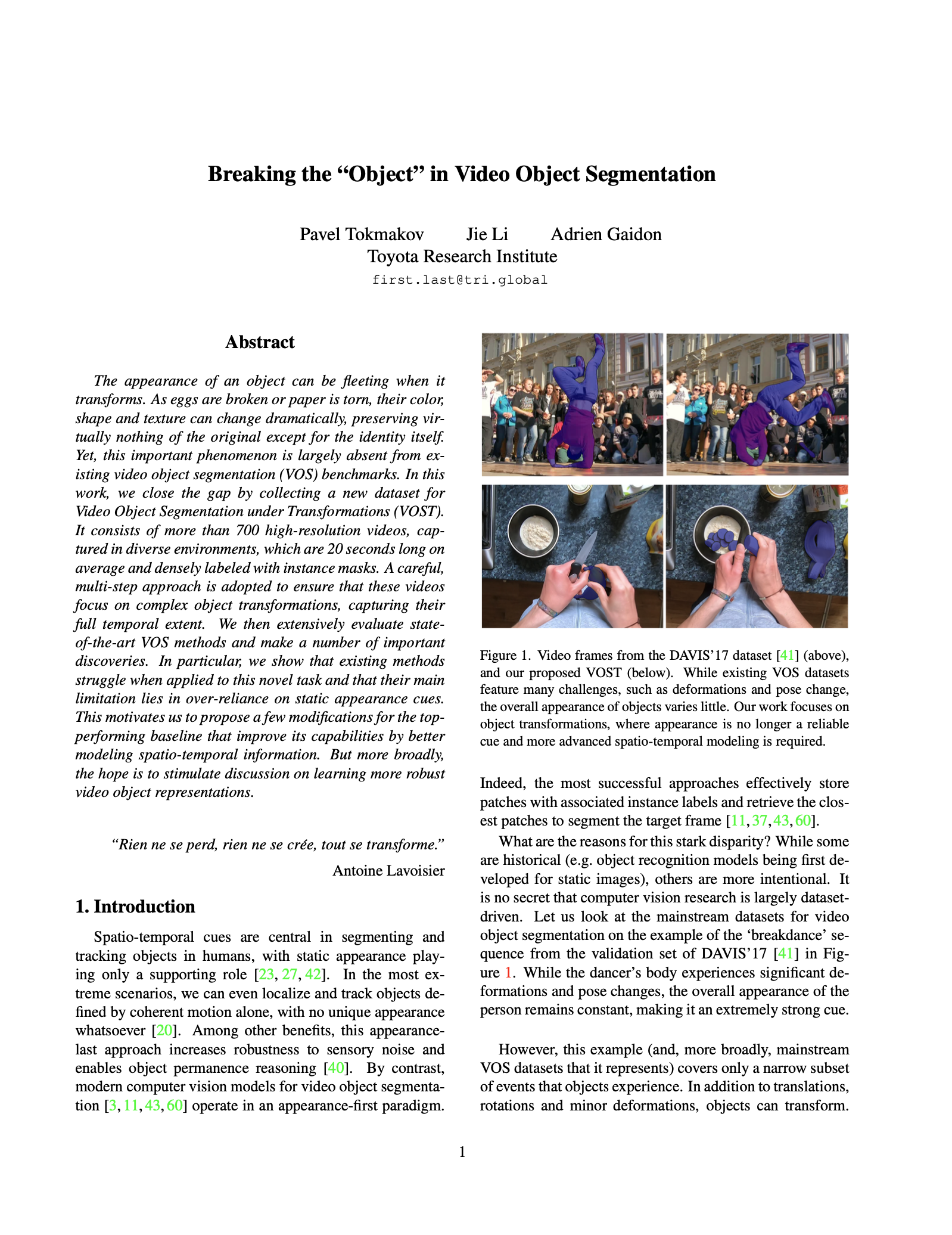

Dataset

VOST is a semi-supervised video object segmentation (VOS) benchmark that focuses on complex object transformations. Unlike in existing datasets, objects in VOST are broken, torn, and molded into new shapes, dramatically changing their overall appearance. As our experiments demonstrate, this presents a major challenge for mainstream, appearance-centric VOS methods.

The dataset consists of more than 700 high-resolution videos captured in diverse environments. The videos are 21 seconds long on average and densely labeled with instance masks. We adopted a careful, multi-step approach to ensure that these videos focus on complex transformations and capture their full temporal extent. Below, we provide a few key statistics of the dataset.